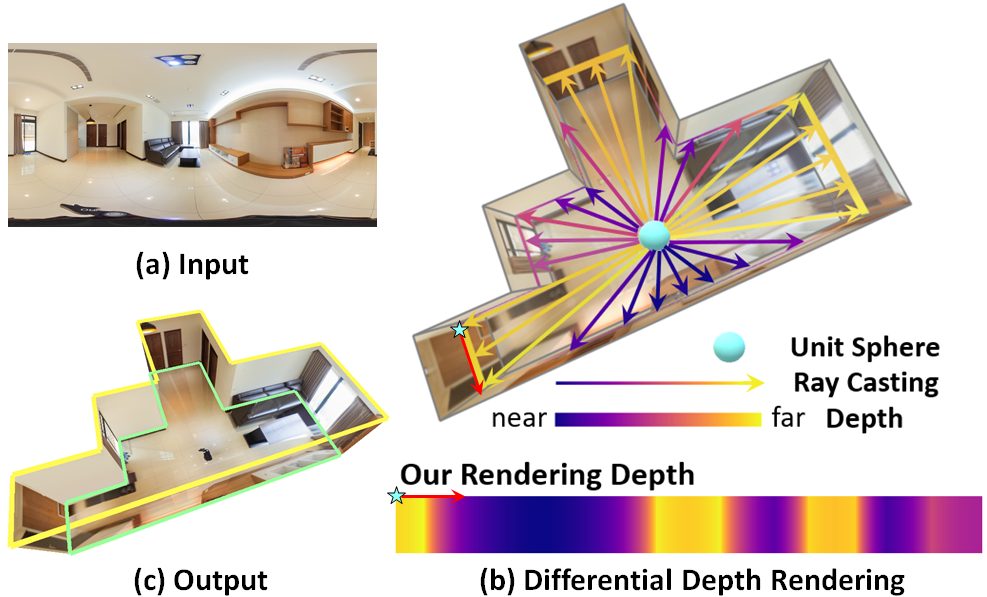

LED2-Net: Monocular 360° Layout Estimation via Differentiable Depth Rendering

Fu-En Wang*, Yu-Hsuan Yeh*, Min Sun, Wei-Chen Chiu, Yi-Hsuan Tsai

@InProceedings{Wang_2021_CVPR,
  author = {Wang, Fu-En and Yeh, Yu-Hsuan and Sun, Min and Chiu, Wei-Chen and Tsai, Yi-Hsuan},
  title = {LED2-Net: Monocular 360deg Layout Estimation via Differentiable Depth Rendering},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2021},
  pages = {12956-12965}
}